How Cybercriminals Are Using AI To Leverage Social Engineering Strategies

Cybercriminals are using AI to leverage social engineering strategies. To be fair, everyone was promised that artificial intelligence would benefit their business. But some businesses benefit more than most.

For now, bad actors in the business of defrauding people of millions, if not billions, of dollars are reaping the biggest rewards. Cyberattacks are expected to cost the global economy US$10.5 trillion by the end of the year.

It’s all over mainstream media. Following on from Microsoft’s sob story about Russians trying to steal its source code, legacy media has drummed up yet another Us vs. Them story.

In an advertorial for a couple of cybersecurity firms, CNBC (syndicated on MSN) reported that cybercriminals are using AI to leverage social engineering strategies in ways that bring down the ‘guardrails.’

They don’t bring down the guardrails if you’re paying attention. Yes, AI-powered cyberattacks appear more genuine, but if you know they exist, you can defend yourself.

So ignore sensationalist headlines. I would give you some examples, but reciting headlines is forbidden under copyright laws that empower mainstream media outlets to prevent anyone from re-using their headlines (because that’s how established media roll).

Would you like to read some true stories?

AI and Social Engineering

First of all, let’s take a sneaky peek at how cybercriminals use AI to leverage social engineering in highly targeted attacks.

As AI-powered tools become more sophisticated at understanding and generating human-like responses, social engineering attacks become more convincing as well.

Various techniques can easily manipulate unsuspecting individuals into divulging confidential information or performing actions that compromise security.

On the flip side, suspicious individuals can easily detect and deflect a nefarious threat, and I intend to explain how in this article. We will look at how bad actors are using sophisticated strategies to impersonate people, brands and chatbots with AI-powered tools.

New-generation phishing attacks use AI to analyse massive amounts of data and craft highly convincing, personalised messages. Phishing emails and fake social media ads draw on scraped information that is publicly available on social media platforms, websites, brand content and public records hosted by government departments.

According to cybersecurity experts, cybercriminals are not simply using ChatGPT. The criminal underworld has developed its own large language models, which it rents out to petty cybercriminals who do not speak English as a first language or who are not so hot with spelling and grammar.

Social engineering strategies typically involve requests to transfer money, disclose sensitive information such as a password, or pay for merchandise that does not exist.

Let’s take a look at how cybercriminals are using AI to leverage social engineering strategies.

Deepfake

Deepfake technology presents significant challenges for cybersecurity because it makes social engineering attacks far more convincing.

Bad actors use AI algorithms to analyse and learn from a large dataset of real images or videos, allowing them to generate content that looks and sounds authentic.

Basically, AI can create highly realistic videos or telephone calls that appear genuine and can be used for social engineering. Cybercriminals can create videos of CEOs or other executives instructing employees to transfer funds or hand over sensitive information.

Deepfake technology can also create videos or audio recordings of realistic conversations between people who have never actually interacted. Voice cloning algorithms analyse the nuances of a person’s speech patterns, intonation and accent to produce a convincing replica of their voice. Cybercriminals can then use these cloned voices to fabricate conversations between executives, employees or other trusted individuals, spreading misinformation or issuing false instructions.

Hackers recently swiped over $25m from the Hong Kong office of a multinational company using deepfake strategies. An unsuspecting clerk followed orders from what appeared to be the company’s London-based CFO on a video call.

That expensive video call could easily have been avoided if the company had deployed a zero-trust policy. And to be perfectly honest, I’m shocked that a firm with the financial clout to transfer $25m did not have a zero-trust policy.
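A zero-trust approach treats the video call itself as unverified, however convincing it looks. As a minimal sketch, assuming a hypothetical internal payments check (the `TransferRequest` fields, the `KNOWN_CONTACTS` directory and the 10,000 threshold are all illustrative, not taken from any real system), the rule might be: never release funds until the request has been confirmed by calling the requester back on a number already on file, and require a second approver for large sums.

```python
from dataclasses import dataclass

# Hypothetical directory of contact details held on file. It is maintained
# independently, so nothing said in an incoming call or email can change it.
KNOWN_CONTACTS = {
    "cfo@example.com": "+44 20 7946 0000",
}

@dataclass
class TransferRequest:
    requester: str                    # who the request claims to come from
    amount: float                     # amount to transfer
    callback_confirmed: bool = False  # True only after calling the number on file
    approvals: int = 0                # independent sign-offs recorded so far

def may_execute(req: TransferRequest, threshold: float = 10_000.0) -> bool:
    """Zero-trust rule: the channel a request arrives on is never proof of identity."""
    if req.requester not in KNOWN_CONTACTS:
        return False  # unknown requester: reject outright
    if not req.callback_confirmed:
        return False  # every transfer needs out-of-band confirmation
    if req.amount >= threshold and req.approvals < 2:
        return False  # large transfers also need two approvers
    return True

# A convincing video call alone is not enough.
print(may_execute(TransferRequest("cfo@example.com", 25_000_000)))  # False
```

The point of the design is that the deepfake can imitate a face and a voice, but it cannot answer a phone number the attacker does not control.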

Brand Impersonation

Brand impersonation can tarnish a company’s reputation. And because hackers, or rivals, can use AI to mimic a brand, businesses of all sizes could find their continuity at risk.

The defence against brand impersonation is making sure your employees, your customers and your stakeholders know how to identify authentic platforms, links and email addresses used by your company. Brand impersonation is batted off with brand information.

Hackers use AI to generate realistic content for fake websites, including text, images and even entire web pages. Lookalike domains, which can be generated automatically with typosquatting tools, closely mimic legitimate brand names, but they cannot replicate every single detail. They might get close, but they cannot be exact. The design of the content can be copied, but a registered domain name cannot, so the URL or the domain in an email address usually gives the impostor away.
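Because the domain is the one detail an impostor cannot copy exactly, it can be checked mechanically. The sketch below is illustrative only: `OFFICIAL_DOMAINS`, `classify_sender` and the edit-distance threshold of 2 are hypothetical choices. It catches near-miss spellings such as `examp1e.com`, and it labels anything else as "unknown" rather than safe. It does not catch look-alike characters from other alphabets or forged From headers; checking those is the job of email authentication such as SPF, DKIM and DMARC.

```python
# Hypothetical list of the domains a brand genuinely uses.
OFFICIAL_DOMAINS = {"example.com", "example.co.uk"}

def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character edits needed to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if equal)
            ))
        prev = curr
    return prev[-1]

def classify_sender(address: str, max_distance: int = 2) -> str:
    """Label a sender address as authentic, lookalike or unknown by its domain."""
    domain = address.rsplit("@", 1)[-1].lower()
    if domain in OFFICIAL_DOMAINS:
        return "authentic"  # exact match only; says nothing about header forgery
    if any(levenshtein(domain, d) <= max_distance for d in OFFICIAL_DOMAINS):
        return "lookalike"  # suspiciously close to a real domain
    return "unknown"

print(classify_sender("billing@examp1e.com"))  # lookalike
```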

Brand impersonation could make it difficult for growing brands to attract and accumulate new customers. Hackers use AI to create fake social media profiles that appear legitimate, and bots can engage with users on social media, spreading misinformation, promoting scams or directing users to malicious websites.

If your company name is used to scam unsuspecting consumers, it could damage your reputation, and at some point, consumers may stop clicking on your online ads altogether.

It will be interesting to see how Facebook et al. combat this issue, given that so much of their business model relies on advertising revenue. In the meantime, brands should create an anti-hacking campaign that raises awareness and shows consumers how to identify legitimate communications from their business.

E-commerce brands can come undone when hackers use AI to create fake product reviews, ratings and recommendations on e-commerce platforms or review sites. These fake reviews can influence purchasing decisions.

Impersonation Misinformation

Cybercriminals are not only using AI to impersonate brands in social engineering strategies. Influential individuals are being cloned as well.

Deepfake technology is being used to impersonate trusted individuals in targeted social engineering attacks on social media. Attackers can create personalised videos or audio recordings based on the victim’s profile, making the deception more convincing.

This strategy is usually aimed at the general public. A recent example targeted ‘Swifties’ (Taylor Swift fans, for the uninitiated) who might be interested in buying the Le Creuset cookware their favourite pop star is enamoured of. The scam ran through fraudulent Facebook ads.

AI was used to generate an ad endorsing the company’s products. The singer appeared to feature in a video dubbed with a synthetic version of her voice.

The concern emerging from the halls of cyber and national security establishments is that deepfake videos can be used to impersonate politicians, celebrities and other public figures and to spread false information.

It wouldn’t be difficult for cybercriminals to create deepfake videos of political figures saying or doing things they never actually said or did. These videos can go viral on social media, causing confusion or chaos and potentially swaying public opinion in the build-up to an election.

There is also every chance that politicians and protected celebrities will use impersonation misinformation as an excuse to deny things they actually did. The best solution is not to believe anything.

Chatbots and Conversational AI

Cybercriminals are leveraging AI-powered chatbots to engage with users in online conversations. The general modus operandi is to engage with victims on social media or messaging platforms to gather information or deceive them into clicking malicious links.

Scammers create chatbots that mimic the appearance and behaviour of legitimate customer support or technical support agents. These bots interact with users on websites, social media, or messaging apps, attempting to gather sensitive information such as login credentials or payment details.

For example, targets may receive messages claiming their account has been cancelled or suspended due to unusual activity, or that their device has a virus or security issue. The chatbot then guides them through steps to “fix” the problem, which often involve clicking a malicious link that instals malware or granting remote access to the device.

As we’ve mentioned in previous articles, the easiest way to avoid clicking on malicious links is to hover your mouse over a link to check whether the URL is authentic. If you’re unsure, quarantine the email and ask the company that sent it whether the email is legitimate, using contact details from its official website rather than any in the email.
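Hovering works because, in an HTML email, the text a link displays and the address it actually points to are stored separately. A mail filter can make the same comparison automatically. The sketch below uses only Python's standard `html.parser` and `urllib.parse`; `LinkAuditor`, `host_of` and `suspicious_links` are hypothetical names, and it only checks links whose visible text itself looks like a web address.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

class LinkAuditor(HTMLParser):
    """Collects (visible text, actual href) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None

def host_of(url: str) -> str:
    """Extract the hostname, ignoring a leading 'www.'."""
    if "://" not in url:
        url = "https://" + url  # visible text often omits the scheme
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host

def suspicious_links(html: str) -> list:
    """Return links whose displayed address differs from their real target."""
    auditor = LinkAuditor()
    auditor.feed(html)
    auditor.close()
    flagged = []
    for text, href in auditor.links:
        looks_like_url = "." in text and " " not in text
        if looks_like_url and host_of(text) != host_of(href):
            flagged.append((text, href))
    return flagged

sample = (
    '<p><a href="https://examp1e-login.net/reset">https://www.example.com/account</a> '
    'or visit the <a href="https://www.example.com/help">Help centre</a></p>'
)
print(suspicious_links(sample))
# [('https://www.example.com/account', 'https://examp1e-login.net/reset')]
```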

In practice, you will only be unsure about these phishing emails if you are a customer of the company, because that is when the message could plausibly be genuine.

Polymorphic Malware

Polymorphic malware changes its code with each infection, making it difficult for traditional antivirus programs to detect. This allows it to bypass signature-based detection methods.

Threat actors are using AI algorithms and automated software to apply code obfuscation techniques, such as transforming the code’s structure, renaming variables and adding meaningless instructions.
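To see why signature matching struggles, consider the simplest kind of signature: a cryptographic hash of a known-bad file. The sketch below uses two harmless, functionally identical snippets as stand-ins for real samples (they are not malware). Renaming a variable and adding a do-nothing `pass` line is enough to produce a completely different SHA-256 hash, so a hash-only blocklist misses the variant. Real antivirus engines use richer signatures and behavioural analysis, so treat this as a deliberate simplification.

```python
import hashlib

# Two harmless, functionally identical snippets: the variant renames a
# variable and adds a meaningless instruction (`pass`).
original = b"total = 2 + 2\nprint(total)\n"
variant = b"x_91 = 2 + 2\npass\nprint(x_91)\n"

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

# A toy signature database that only knows the original's hash.
KNOWN_BAD_HASHES = {sha256_hex(original)}

def signature_match(sample: bytes) -> bool:
    """Exact-hash lookup: any change to the bytes defeats it."""
    return sha256_hex(sample) in KNOWN_BAD_HASHES

print(signature_match(original))  # True
print(signature_match(variant))   # False
```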

Polymorphic malware can use AI to dynamically change its obfuscation techniques at runtime, making it harder for traditional security tools to analyse and detect the malicious code.

AI algorithms can also learn from the patterns and signatures that security software relies on, generating code that matches no known signature. The malware can also behave like legitimate software in sandbox environments (used for testing malware behaviour), so it avoids detection until it has entered a system.

This could be quite easy for hackers to do because all software develops vulnerabilities. Not only that, companies typically make the vulnerability public when they roll out the software patch.

Basically, vulnerability research conducted by software companies actually makes their customers more vulnerable to cyberattacks. Whilst you can’t operate your business without software, AI-powered malware increases the urgency of applying security patches at the earliest possible moment.

If patch management solutions were not important before (they were), they are even more important now!
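Patch management starts with knowing which installed software is older than the first fixed release named in a vendor's advisory. As an illustrative sketch, the product names and version numbers below are hypothetical stand-ins for a real asset inventory and advisory feed, and the parser assumes purely numeric dotted versions. Versions are compared as numbers rather than as text, because a plain string comparison would wrongly rank 4.2.10 below 4.2.7.

```python
# Hypothetical advisory data: the first release of each product that contains the fix.
MIN_PATCHED = {"mail-server": "4.2.7", "vpn-client": "10.1.0"}

# Hypothetical inventory of what is actually installed across the business.
INSTALLED = {"mail-server": "4.2.10", "vpn-client": "9.8.3", "editor": "1.0"}

def parse_version(version: str) -> tuple:
    """Turn a purely numeric dotted version like '4.2.10' into (4, 2, 10)."""
    return tuple(int(part) for part in version.split("."))

def unpatched(installed: dict, min_patched: dict) -> list:
    """Return installed products older than their first patched release."""
    return sorted(
        name
        for name, version in installed.items()
        if name in min_patched
        and parse_version(version) < parse_version(min_patched[name])
    )

print(unpatched(INSTALLED, MIN_PATCHED))  # ['vpn-client']
```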

Polymorphic malware can be used as a payload delivery mechanism for other malicious activities, such as ransomware or spyware.

Once polymorphic malware infects a system, it can download and execute additional payloads without detection, or steal sensitive data from businesses, such as customer information, financial records or intellectual property.

Quishing

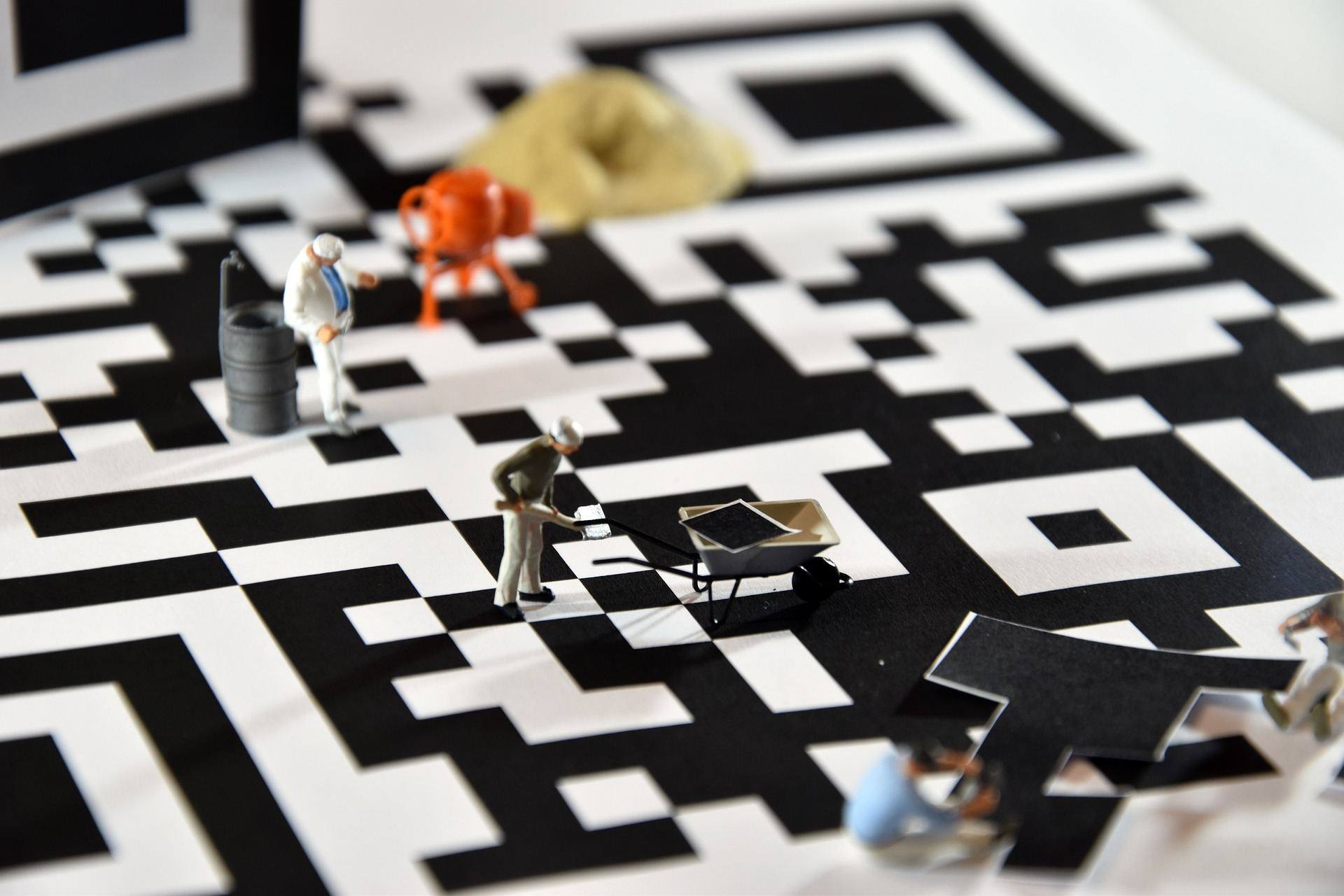

Quishing is the term for phishing scams conducted through fake QR codes.

In the last couple of years, malicious QR codes have been creeping increasingly into nefarious activities. Scam codes have appeared in paper junk mail, on stickers on public parking meters, on event tickets, in phishing emails and in online ads for fake promotions, discounts or giveaways.

Hackers use AI to create fake URLs embedded in QR codes. These URLs lead to phishing websites designed to steal sensitive information such as login credentials or credit card details.

AI can dynamically generate QR codes with randomised URLs, making it difficult for security measures to blacklist or block them. Attackers can also hide the final destination behind URL shorteners or redirects, so the malicious link is harder for traditional security tools to spot.

The good news is that QR code scams are easy to defend against. Don’t use QR codes in a way that bad actors can mimic. Scanning a QR code alone does not infect a person’s phone. A crime can only take place if the individual clicks on a malicious link on a fake website or if they enter personal details such as a debit card number.

In addition, tell your customers either that you do not use QR codes or, if you do, how you use them. Educate your customers and set rules they can follow to verify that a QR code is associated with your brand. For example, your genuine QR codes might only ever lead to specific pages on a verifiable URL.
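A rule like that can also be checked in code, for example in a brand's own app or a staff training exercise. A minimal sketch, assuming a hypothetical policy that every genuine QR code points to `https://www.example.com/qr/...`: checking a decoded URL against a short allowlist of known-good destinations is more robust than blocklisting bad ones, because randomised scam URLs never appear on a blocklist in advance. Decoding the QR image itself is out of scope here; `is_brand_qr` takes the URL text a scanner produced.

```python
from urllib.parse import urlparse

# Hypothetical policy: genuine QR codes only ever point here.
ALLOWED_QR_DESTINATIONS = {("www.example.com", "/qr/")}  # (host, path prefix)

def is_brand_qr(decoded_url: str) -> bool:
    """True only if the decoded URL uses HTTPS and an allowlisted host and path."""
    parsed = urlparse(decoded_url)
    if parsed.scheme != "https":
        return False
    host = (parsed.hostname or "").lower()
    return any(
        host == allowed_host and parsed.path.startswith(prefix)
        for allowed_host, prefix in ALLOWED_QR_DESTINATIONS
    )

for url in [
    "https://www.example.com/qr/parking?zone=12",     # genuine
    "https://www.example.com.pay-now.io/qr/parking",  # lookalike host
    "https://www.example.com@evil.test/qr/parking",   # real host is evil.test
    "http://www.example.com/qr/parking",              # not HTTPS
]:
    print(is_brand_qr(url), url)
```

The third URL shows why the check parses the hostname instead of searching the text: everything before the `@` is treated as login details, so the code actually leads to `evil.test`.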

Identify Social Engineering Strategies

The hype around cybercrime may be real, but many of the strategies are dreamed up by cybersecurity firms for marketing campaigns designed to sell their software. And the advertising is pushed through legacy media that rely on advertising fees in the same way social media platforms do.

Our knowledgeable IT support specialists in London have strategies that are far more effective than software solutions alone in combatting the social engineering attacks cybercriminals can fabricate with AI.

If you want to save money and your brand reputation, get in touch with our IT support team in London today and we will show you the path to a secure and fruitful future.